Compiling libaam.so for EnergyXT2 on 64-bit

by admin on Jan.27, 2011, under IT Adventures, Linux

Recently I decided to revive my interest in playing the guitar. I took a few lessons back around 1988, but didn’t stick with it. Over the years my desire to pick it up again has cropped up here and there, but either I was too busy with life and college, or my guitar was in another state, or whatnot. However, I was recently reunited with my guitar, but not my old amp. I decided to see how I might use my Linux box to allow me to play through it, and perhaps have some effects processing as well. I quickly discovered the rich extent of audio tools available for Linux, and even for the guitar specifically. I hit a gold mine.

I quickly found several USB sound cards with 1/4″ jack inputs that were well supported under Linux, readily available, and modestly priced. I ordered a Behringer UCG 102 off Amazon for all of $29.

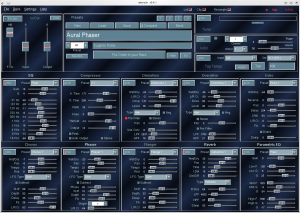

I also discovered Rakarrack, a full-featured guitar effects processing suite for Linux. I had no issues getting Rakarrack installed, and eagerly awaited the arrival of the new sound card. If you play the guitar, you really have to check out Rakarrack; it is amazing.

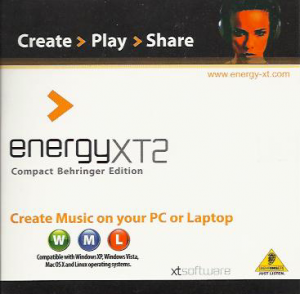

When the Behringer arrived I was shocked to discover that the EnergyXT2 recording software it came with natively supported Linux. Displayed right on the CD sleeve was a nice capital “L” advertising Linux support.

This, of course, I would have to try, simply as a matter of principle. However, I soon discovered several issues with this software; my solutions to them are below.

NOTE: I should say however that the Behringer Guitar to USB interface worked flawlessly, and in short order I had connected my guitar, fired up the Jack audio server, launched Rakarrack and was playing. The effects provided by Rakarrack include: distortion, chorus, reverb, flanger, digital delay and many others. Wow – this is like having an array of effects pedals for free, and they sound great. And it should be mentioned as well that the open source Ardour recording software worked without requiring anything beyond finding and installing the RPM. So . . . why even bother with getting EnergyXT2 to work? Well, because it’s there. I loved the idea of getting Linux software with a product I bought, and by God I intended to see it work.

Do you need to do this too? Well, that’s up to you. You can certainly use Ardour to record with, and it works extremely well. But if you want to try out EnergyXT, and use it with Jack, and with MIDI, and especially if you are running 64-bit – then read on.

If all you want is a basic working EnergyXT2 without Jack support, that should not be too difficult:

0) First, you need to download the latest version of EnergyXT2 from the website. The version which was shipped has a bug which causes it to fail with:

X Error of failed request: BadImplementation (server does not implement operation)

Major opcode of failed request: 139 (MIT-SHM)

Minor opcode of failed request: 5 (X_ShmCreatePixmap)

Serial number of failed request: 382

Current serial number in output stream: 384

X Error of failed request: BadDrawable (invalid Pixmap or Window parameter)

Major opcode of failed request: 55 (X_CreateGC)

Resource id in failed request: 0x2a000a4

Serial number of failed request: 383

Current serial number in output stream: 384

This is easily solved by downloading the updated version. You might want to grab the manual too.

At this point it should start and work correctly, but you will not have Jack support. For that you need to continue with the next steps. This assumes some familiarity with compiling things, obtaining required libraries, and some basic debugging – it is not really difficult, but if you have not compiled things much it may be a learning experience.

1) The version of libaam.so provided with the software has several limitations, the most significant of which is that it does not support the Jack audio server. Now – there are two routes to take here:

A) Download the source for libaam.so from the EnergyXT libaam site and compile using the instructions there, or:

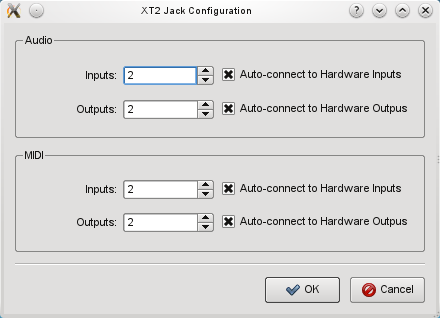

B) Download and compile the source from the libaam Jack Sourceforge project. This is most certainly the better route to take, as it supports more MIDI channels and can also build a Qt interface to configure it to work with Jack. Simply download, untar and continue with the steps below. Note that this contains its own readme, makefile and Qt interface, unlike the basic version from the EnergyXT site.

2) Next, I was building this on a 64-bit OpenSuse 11.3 system. Building on a 64-bit platform introduces a number of additional factors which have to be addressed; on a 32-bit platform it would be easier. If you are using a 64-bit environment (perhaps like Studio64), the first thing you need to do is ensure you have the 32-bit versions of the libraries you will need, AS WELL AS 32-bit versions of gcc, glibc, etc. These are going to be called different things depending on your distro, but you are looking for things like:

libasound2-devel

libjack-devel-1.9.5-2.8.i586 (get 32-bit version from webpin)

alsa-plugins-jack qjackctl

libqt4-devel-4.6.3-2.1.1.i586

alsa-devel-1.0.23-2.12.x86_64

glibc-32bit-2.11.2-3.3.1.x86_64

gcc45-32bit-4.5.0_20100604-1.12.x86_64

gcc-c++-32bit-4.5-4.2.x86_64

And maybe . . .

libSDL_mixer-1_2-0-32bit

libSDL_mixer-1_2-0

Should you receive any build errors complaining about libs you know you have, you may need to create symlinks for several of the libraries, such as:

sudo ln -s /usr/lib/libasound.so.2.0.0 /usr/lib/libasound.so

sudo ln -s /usr/lib/libjack.so.0.1.0 /usr/lib/libjack.so

sudo ln -s /lib/libgcc_s.so.1 /lib/libgcc_s.so

Getting all the 32-bit libraries you need may take a few tries – each time you compile you may be presented with some new library needed – but you should be able to work through them and fortunately there are a limited number required.
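When the linker complains about a library you know is installed, it can help to confirm whether the file it found is actually the 32-bit build. The following is only a sketch, not part of the original build steps: `elf_class` is a helper name made up for this example, and it simply reads the first five bytes of the file (the ELF magic plus the class byte). The standard `file` utility reports the same information.

```shell
# Hypothetical helper: print whether a file is a 32-bit or 64-bit ELF object.
# An ELF file starts with the bytes 0x7f 'E' 'L' 'F', followed by a class
# byte: 1 means 32-bit, 2 means 64-bit.
elf_class() {
    # Split the decimal byte values from od into positional parameters.
    set -- $(od -An -tu1 -N5 "$1" 2>/dev/null)
    case "$*" in
        "127 69 76 70 1") echo "32-bit" ;;
        "127 69 76 70 2") echo "64-bit" ;;
        *) echo "not ELF" ;;
    esac
}
```

For example, `elf_class /usr/lib/libjack.so` should print `32-bit` if the symlink above points at the 32-bit library.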

If you do not have 32-bit gcc, you may get messages similar to:

paracelsus@Callandor:~/energyXT/libaam-0.0.2> g++ -m32 -shared -lasound -ljack jack.cpp -o libaam.so

/usr/lib64/gcc/x86_64-suse-linux/4.3/../../../../x86_64-suse-linux/bin/ld:

i386:x86-64 architecture of input file `/usr/lib64/gcc/x86_64-suse-linux/4.3/crtbeginS.o' is incompatible with i386 output

This is simply due to missing the proper gcc and g++ 32-bit packages. Oops. (While I had the necessary 32-bit libs for Qt and Jack, I inadvertently had not installed the 32-bit gcc and g++ libs, so it indeed was missing the 32-bit version of crtbeginS.o, just like it says.)
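A quick way to find out whether the 32-bit toolchain pieces are in place, before touching the libaam sources at all, is to try building a trivial program with -m32. This is a minimal sketch under that assumption; `can_build_32bit` is a name invented for this example, and it only tests the C side (gcc), not g++ or any of the audio libraries.

```shell
# Hypothetical helper: succeed only if gcc can compile and link a 32-bit program.
can_build_32bit() {
    workdir=$(mktemp -d) || return 1
    printf 'int main(void) { return 0; }\n' > "$workdir/t.c"
    # Linking is the step that needs the 32-bit crt files and glibc.
    if gcc -m32 "$workdir/t.c" -o "$workdir/t" >/dev/null 2>&1; then
        status=0
    else
        status=1
    fi
    rm -rf "$workdir"
    return "$status"
}
```

Run it as `can_build_32bit && echo "32-bit toolchain OK"`. If it fails, installing the 32-bit gcc and glibc packages listed above is the first thing to try.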

3) This then allowed libaam.so to build, and you should be able to use the library at this point. You most likely want the configuration interface too, but building xt2-config failed with a missing qmake-qt4, though it was installed. That was corrected with a symlink:

ln -s /usr/lib/qt4/bin/qmake /usr/bin/qmake-qt4

4) The next problem building xt2-config was with it expecting a 32-bit build environment. Note that -m32 is specified in the Makefile for libaam, but NOT in the config/Makefile for xt2-config, thus:

g++ -c -m32 -pipe -g -Wall -W -D_REENTRANT -DQT_GUI_LIB -DQT_CORE_LIB -DQT_SHARED -I/usr/share/qt4/mkspecs/linux-g++ -I. -I/usr/include/QtCore -I/usr/include/QtGui -I/usr/include -I. -I. -o moc_configw.o moc_configw.cpp

g++ -o xt2-config main.o configw.o moc_configw.o -L/usr/lib -lQtGui -L/usr/lib -L/usr/X11R6/lib -lQtCore -lpthread

/usr/lib64/gcc/x86_64-suse-linux/4.3/../../../../x86_64-suse-linux/bin/ld: skipping incompatible /usr/lib/libQtGui.so when searching for -lQtGui

/usr/lib64/gcc/x86_64-suse-linux/4.3/../../../../x86_64-suse-linux/bin/ld: skipping incompatible /usr/lib/libQtGui.so when searching for -lQtGui

/usr/lib64/gcc/x86_64-suse-linux/4.3/../../../../x86_64-suse-linux/bin/ld: skipping incompatible /usr/lib/libQtGui.so when searching for -lQtGui

/usr/lib64/gcc/x86_64-suse-linux/4.3/../../../../x86_64-suse-linux/bin/ld: cannot find -lQtGui

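Here I can see that g++ is not being called with -m32 when it links the three compiled files main.o, configw.o and moc_configw.o, so the linker tries to produce 64-bit output and skips the 32-bit Qt libraries in /usr/lib. To get this to build correctly I modified config/Makefile (which is called from the Makefile in the build root), adding -m32 to the compiler flags and passing $(CXXFLAGS) to the link rule: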

#CFLAGS = -pipe -g -Wall -W -D_REENTRANT $(DEFINES)

CFLAGS = -m32 -march=i386 -pipe -g -Wall -W -D_REENTRANT $(DEFINES)

#CXXFLAGS = -pipe -g -Wall -W -D_REENTRANT $(DEFINES)

CXXFLAGS = -m32 -march=i386 -pipe -g -Wall -W -D_REENTRANT $(DEFINES)

And . . .

####### Build rules

all: Makefile $(TARGET)

$(TARGET): ui_config.h $(OBJECTS)
	$(LINK) $(LFLAGS) $(CXXFLAGS) -o $(TARGET) $(OBJECTS) $(OBJCOMP) $(LIBS)

The result is a working libaam.so which supports Jack, and a working xt2-config. Copy libaam.so to your energyXT folder (back up the existing one first, then replace it), and move xt2-config from config/ to /usr/bin.
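The backup-and-replace step can be sketched as a small shell function. This is only an illustration: `install_with_backup` is a made-up name, and the energyXT path in the usage line is an assumption, so adjust it to wherever you unpacked the software.

```shell
# Hypothetical helper: copy a new file into place, keeping the old one as <dest>.orig.
install_with_backup() {
    src=$1
    dest=$2
    # Preserve the shipped file so it can be restored if the new build misbehaves.
    if [ -e "$dest" ]; then
        cp -p "$dest" "$dest.orig" || return 1
    fi
    cp "$src" "$dest"
}
```

For example: `install_with_backup libaam.so ~/energyXT/libaam.so` (the destination path here is assumed, not taken from the EnergyXT documentation).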

Of course, on a 32-bit OS EnergyXT2 should just run out of the box (as long as you have downloaded an updated copy), but you still would not have Jack support (which is really the way to go) or the Qt config interface. As more users move to 64-bit and RT kernels for audio applications, this may come in handy.

PHP Bug 53632 Floating Point Arithmetic Issue and Solutions

by admin on Jan.06, 2011, under Linux

Today the above PHP bug was pointed out to me, and in looking into it further I discovered a few interesting things, which I’ve decided to list here. While this bug is serious, a bit of research quickly showed it requires a rather specific combination of PHP version, OS architecture and CPU architecture and feature set, as well as PHP build options.

1) This is completely dependent on an x87 FPU design flaw, wherein 80-bit FP arithmetic is used.

2) Newer processors (x86-64) with SSE2 are IEEE-754 compliant, and use 64-bit FP. So, even if you are running an affected version of PHP (5.3.x or 5.2.x) on a 32-bit OS, if you have an x86-64 CPU you may not in fact be affected (depending on how PHP was built). You can check with a simple test such as the one below; an unaffected build prints the value, while an affected one hangs:

[user@host ~]$ cat phptest.php

<?php $d = 2.2250738585072011e-308; echo $d + 0; echo "\n"; ?>

[user@host ~]$ php phptest.php

2.2250738585072E-308

3) One workaround, if you are affected, is to simply have php.ini preload a small script that detects and handles requests containing the problematic value, as described here.

4) Alternatively, if you wish to compile your own PHP, adding -mfpmath=sse (or -ffloat-store) to your CFLAGS will also resolve this issue.
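Since points 2 and 4 both hinge on SSE2, a first triage step on a Linux server is to see whether the CPU advertises it at all. The sketch below assumes a Linux-style /proc/cpuinfo; `has_sse2` is a name made up for this example. Note that it only tells you what the hardware supports, not how PHP was compiled, so the phptest.php check above remains the real test.

```shell
# Hypothetical helper: succeed if the CPU flags include sse2.
# $1 is an optional cpuinfo-style file; it defaults to /proc/cpuinfo.
has_sse2() {
    # -w matches sse2 as a whole word, so flags like ssse3 do not count.
    grep -qw 'sse2' "${1:-/proc/cpuinfo}"
}
```

Usage: `has_sse2 && echo "CPU supports SSE2"`.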

While at first this bug may give the impression that great effort will be needed on a sysadmin’s part to update PHP on many servers, investigation and testing may show that little needs to be done. Of course, new packages for PHP 5.3 will soon be available which will correct the issue as well, but the above may assist you in assessing what actions may need to be taken in the interim.

New Job at Oak Ridge National Laboratory

by admin on Oct.31, 2010, under Linux, My Life, ORNL

I have officially started my new position at Oak Ridge National Laboratory outside of Oak Ridge, Tennessee. I am working in the Information Technology Services Division, which is part of the Computing and Computational Sciences Directorate. My position is that of Unix / Linux system administrator, working primarily in maintaining systems and infrastructure supporting the Climate Change Science Institute.

The lab has a number of core focus areas, including environmental and climate science and materials science (including new facilities for nanophase research), and is a world leader in neutron science at the Spallation Neutron Source. ORNL is also the home of the National Center for Computational Sciences, and the home of Jaguar and Kraken, currently the first and third most powerful supercomputers in the world. ORNL also supports national security projects, nuclear non-proliferation work, nuclear science and engineering, fusion power and alternative energy sources, as well as being a leader in computational biology.

These diverse research areas all make heavy use of Linux and Unix for scientific applications and research tools. Of course, the supercomputing center relies on Linux almost exclusively, as the Cray systems and their support clusters run various distributions of Linux.

The multi-disciplinary environment of the lab makes for a very interesting work environment. I am currently supporting researchers in their work in climate sciences, for example by maintaining the systems used for the ORNL Distributed Active Archive Center (DAAC), and the Atmospheric Radiation Measurement Program (ARM) systems. Additionally, I will be working more with biological science systems as time goes on.

Eventually, I would like to explore my interests in high performance computing, both in the computational needs for Environmental Sciences, and otherwise. I’m increasingly interested in GPU computing, and would like to work with developing and supporting compute environments which use NVIDIA CUDA and OpenCL which can help realize dramatic performance increases in massively parallel computing.

The lab is a world-class facility and is the showcase of our national laboratory system. The campus grounds are quite nice. The lab is surrounded by trees, and is on a huge reservation. The drive to work is beautiful. Here is a picture of the fall colors behind the Joint Institute for Biological Sciences building.

Everyone at the lab has been very easy to work with; in fact, I would have to say the people make up one of the best aspects of working at the lab. It has been a pleasure getting to know my new colleagues and customers.

Our move to Tennessee went very well, and Sydney and I are planning to close on a home up here this month. It’s been a big change for us, but we were very much ready to leave Florida. The changing seasons, smaller urban area, and ready access to the Great Smoky Mountains National Park are much more to our liking than the urban sprawl of Tampa Bay. So far we have been on three hikes to the Smoky Mountains and each of them was breathtaking. We once again live someplace where we can truly enjoy the outdoors. (We got a bit spoiled living in Colorado and Alaska!)

Moving up here was a big adventure, but I am happy to say that everything is going very well. The relocation assistance provided by the lab has been tremendously helpful and we are starting to feel very much at home here. I’ll post some pictures of our hikes soon, and do some updates from time to time on what projects I am working with at the Lab and other interesting news.

Mendeley – iTunes for Research, but Better

by admin on Aug.20, 2010, under My Life

While I am not a big iTunes fan, the analogy is at least somewhat accurate and sums up some of the key benefits of Mendeley Desktop quickly. Like iTunes, it provides a client you can use to organize, access and interact with media – in this case research papers rather than music. The cross platform client runs on Linux, OS X and Windows and can sync your research library with on-line storage you can access anywhere. Some of the key features I like are:

Features

* Search your entire library of papers very quickly. Make notes, and define tags, for papers which stay with them and can also be searched. Searching within dozens of PDFs seems to execute very fast.

* Automatic retrieval of document information based on analysis of metadata in the paper. Mendeley will scan the paper, identify the author and name of the paper, and if it finds a DOI or PMID publication catalog number it can then pull in additional metadata for the paper for you – all very painlessly. Very cool.

* Allows you to create collections which can be shared publicly (I’ve not used this feature yet.)

* On-line library of thousands of papers to browse and easily download. Note: Unlike iTunes, Mendeley is not a publishing agent per se; they simply point you to where the research can be found. Thus you may get directed to sites like the ACM or IEEE digital libraries, or various sites for medical research, which require an account to access. To make maximum use of Mendeley you may want to find out if your company or school has an account which provides access to these publications. (There are many papers available, however, which do not require this additional access.)

* The web site presents the currently most highly read papers, organized by discipline, which makes finding interesting things to read very simple.

* Web importer: A JavaScript bookmarklet that imports documents you find on-line, and associated PDFs if possible, into your library.

* Provides social network opportunities by searching for other Mendeley users, viewing their publications and bio information, and contacting them through Mendeley.

* One of the best features is integration with OpenOffice / MS Office which makes adding citations a snap. Choose your citation format and use the plug-in for your Office Suite and you can painlessly add citations with abandon. Oh the glory.

In addition to downloading the desktop client, I recommend reading the Getting Started Guide which will orient you on how to add existing papers you may have into Mendeley and how the metadata analysis works. It also shows how to use the OpenOffice plug-in for citing works.

Note that if you wish to synchronize your PDFs with your on-line account, you must enable this. Use the “Edit Settings” button (near the center of the toolbar).

You can add existing PDFs simply by dragging and dropping them into Mendeley. This places them in a “Needs Review” status during which you can have Mendeley search online for additional information. (It does this automatically, but if you need to do so manually: Right click on the document, select “mark as” and choose “Needs Review”. A dialog will now appear asking if you want to try automatically searching.)

Now, just start browsing for papers at the Mendeley site and use the bookmarklet when you are browsing to import items into your Mendeley library.

Happy reading!

NVIDIA CUDA and VirtualGL

by admin on Aug.08, 2010, under Linux, My Life

(Update: I’ve written the Wiki now and you can find it here.)

So I’ve been playing around with NVIDIA’s CUDA Toolkit and SDK and reading a book that just came out, “CUDA by Example”. (See this Linux Journal article for some general background on CUDA.)

This is pretty interesting (and fun) stuff. So far I’ve gotten the toolkit installed and compiled the sample code projects in the SDK. I have to use my workstation at work as (sadly) my ancient ass NVIDIA card at home is not CUDA capable and my system is so old it is really just a typewriter at this point.

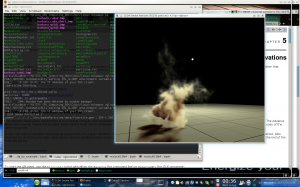

Running just the sample CUDA projects is pretty impressive though. My old school GeForce 8800 at work has a meager 32 cores. (The card I just ordered has 96, and the most recent Fermi architecture ones have 448 and 6GB – yea, holy crap.) However, with only 32 cores it renders the particle, smoke and other samples quite nicely. These are rendered in 3D, allowing you to change perspective, and sometimes alter the particle density, light source, etc. They are just examples showing how to run general purpose code (and render results in OpenGL) on the GPU.

So then I thought: I would like to forward the rendered OpenGL images to my workstation at home. Alas, SSH, VNC, et al. all actually send the OpenGL to the client to be rendered. Enter VirtualGL, which does just what you would think: it attaches a loadable module to an executable which intercepts OpenGL requests and sends them to the local GPU for rendering, putting the results into a pixel buffer which then gets sent to the client. Wow. You can run your rendering in a data center and forward the results anywhere. That’s nifty!

Here is a picture of the CUDA smoke particles sample, being rendered at work, and forwarded via SSH X Forwarding with rendering handled by VirtualGL. (I think Geek Level just dinged.)

I’m going to write up a wiki on this stuff, but if you have any recent NVIDIA card (8800 or newer), even a mobile one, you likely have the capability to run CUDA. If you are interested you can download the Toolkit and SDK and have at it. (Update: I’ve actually written the Wiki now and you can find it here.)

After testing the CUDA waters I have to say I am very impressed. I can easily see CUDA being integrated into many applications in the near future. There are already plugins to offload MS Excel formulas onto the GPU, run Mathematica on it, etc. The integration of multi core CPUs, in even consumer class hardware, combined with the capabilities of GPUs very well might turn the “multi core revolution” in consumer computers into a “massively parallel revolution”. In fact, it’s already happened. Massively parallel computing is now possible on your notebook, it just has not been fully exploited yet. (Think of the cryptographic fun you could have.) Of course once Apple catches on, it will be called iMassive.

This is just all pretty fascinating to me. My first computers had 4 MHz Motorola CPUs, 32K of memory, etc. and now I just bought a 96 core GPU which I can write code for in CUDA C and run general purpose computations on. Wow. And I’m getting ready to get an i7 too. It’s all just a bit dizzying. But very fun.

If you have not checked out CUDA, I highly recommend it. It’s a good opportunity to learn more about massively parallel computing, which I think we are going to be seeing a whole lot more of. You can easily find the book “CUDA by Example”. It requires basic to intermediate C skills, but no OpenGL or other graphics languages at all; you learn CUDA C as you go and start writing parallel code very quickly. (Amazingly, the run time just integrates the GPU device code and host code, and you can invoke code to run on the GPU as easily as any C function. It is some hot stuff behind the scenes, but surprisingly easy to code.)