NVIDIA CUDA and VirtualGL

by admin on Aug.08, 2010, under Linux, My Life

(Update: I’ve written the Wiki now and you can find it here.)

So I’ve been playing around with NVIDIA’s CUDA Toolkit and SDK and reading a book that just came out, “CUDA by Example”. (See this Linux Journal article for some general background on CUDA.)

This is pretty interesting (and fun) stuff. So far I’ve gotten the toolkit installed and compiled the sample code projects in the SDK. I have to use my workstation at work as (sadly) my ancient-ass NVIDIA card at home is not CUDA capable, and my system is so old it is really just a typewriter at this point.

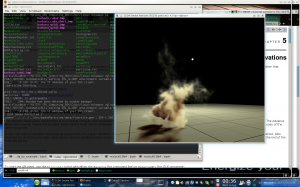

Running just the sample CUDA projects is pretty impressive though. My old-school GeForce 8800 at work has a meager 32 cores. (The card I just ordered has 96, and the most recent Fermi architecture ones have 448 cores and 6 GB of memory – yeah, holy crap.) However, even with only 32 cores it renders the particle, smoke and other samples quite nicely. These are rendered in 3D, allowing you to change perspective, and sometimes alter the particle density, light source, etc. They are just examples showing how to run general-purpose code (and render the results in OpenGL) on the GPU.

So then I thought – I would like to forward the rendered OpenGL images to my workstation at home. Alas, plain SSH X forwarding actually sends the OpenGL commands to the client to be rendered, and VNC et al. do not put the server’s GPU to work either. Enter VirtualGL, which does just what you would think – it preloads a library into an executable, which intercepts OpenGL requests and sends them to the local (server-side) GPU for rendering, putting the results into a pixel buffer which then gets sent to the client as plain images. Wow. You can run your rendering in a data center and forward the results anywhere. That’s nifty!

Here is a picture of the CUDA smoke particles sample, being rendered at work, and forwarded via SSH X Forwarding with rendering handled by VirtualGL. (I think Geek Level just dinged.)

I’m going to write up a wiki on this stuff, but if you have any recent NVIDIA card (8800 or newer), even a mobile one, you likely have the capability to run CUDA. If you are interested you can download the Toolkit and SDK and have at it. (Update: I’ve actually written the Wiki now and you can find it here.)
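If you are not sure whether your card qualifies, the CUDA runtime can tell you. Here is a minimal sketch, assuming the Toolkit is installed and `nvcc` is on your path; the helper name `count_cuda_devices` and the file name are just my own picks for illustration.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Returns the number of CUDA-capable devices, or 0 if the runtime
// reports an error (for example, no driver is installed).
int count_cuda_devices() {
    int count = 0;
    cudaError_t err = cudaGetDeviceCount(&count);
    if (err != cudaSuccess) {
        std::fprintf(stderr, "cudaGetDeviceCount: %s\n", cudaGetErrorString(err));
        return 0;
    }
    return count;
}

int main() {
    int count = count_cuda_devices();
    std::printf("CUDA devices found: %d\n", count);

    for (int dev = 0; dev < count; ++dev) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, dev) != cudaSuccess) continue;
        // The runtime reports multiprocessors, not individual cores;
        // the cores-per-multiprocessor figure depends on the architecture.
        std::printf("  %d: %s, compute capability %d.%d, %d multiprocessors, %zu MB\n",
                    dev, prop.name, prop.major, prop.minor,
                    prop.multiProcessorCount,
                    prop.totalGlobalMem / (1024 * 1024));
    }
    return 0;
}
```

Save it as something like `devices.cu` and build it with `nvcc devices.cu -o devices`. The SDK’s own deviceQuery sample prints far more detail, so treat this as a quick sanity check rather than a replacement.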

After testing the CUDA waters I have to say I am very impressed. I can easily see CUDA being integrated into many applications in the near future. There are already plugins to offload MS Excel formulas onto the GPU, run Mathematica on it, etc. The integration of multi-core CPUs, even in consumer-class hardware, combined with the capabilities of GPUs, may very well take things from a “multi-core revolution” in consumer computers to a “massively parallel revolution”. In fact, it’s already happened. Massively parallel computing is now possible on your notebook; it just has not been fully exploited yet. (Think of the cryptographic fun you could have.) Of course once Apple catches on, it will be called iMassive.

This is all just pretty fascinating to me. My first computers had 4 MHz Motorola CPUs, 32K of memory, etc., and now I have just bought a 96-core GPU that I can write CUDA C code for and run general-purpose computations on. Wow. And I’m getting ready to get an i7 too – it’s all just a bit dizzying. But very fun.

If you have not checked out CUDA, I highly recommend it. It’s a good opportunity to learn more about massively parallel computing, which I think we are going to be seeing a whole lot more of. You can easily find the book “CUDA by Example”. It requires basic to intermediate C skills, but no OpenGL or other graphics languages at all – you learn CUDA C as you go and start writing parallel code very quickly. (Amazingly, the runtime just integrates the GPU device code and host code, and you can invoke code to run on the GPU as easily as any C function. It is some hot stuff behind the scenes, but surprisingly easy to code.)
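To give a feel for how device code and host code sit side by side, here is a rough sketch of the classic vector-add exercise. It is my own minimal version, not a listing from the book; the names `add`, `add_on_gpu` and `N`, and the choice of 128 threads per block, are just illustrative assumptions.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

#define N 1024

// Device code: each GPU thread adds one pair of elements.
__global__ void add(const int *a, const int *b, int *c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Host code: copy the inputs to the GPU, launch the kernel, and copy
// the result back. Returns true if every CUDA call succeeded.
bool add_on_gpu(const int *a, const int *b, int *c, int n) {
    int *dev_a = NULL, *dev_b = NULL, *dev_c = NULL;
    size_t bytes = n * sizeof(int);

    bool ok = cudaMalloc(&dev_a, bytes) == cudaSuccess &&
              cudaMalloc(&dev_b, bytes) == cudaSuccess &&
              cudaMalloc(&dev_c, bytes) == cudaSuccess &&
              cudaMemcpy(dev_a, a, bytes, cudaMemcpyHostToDevice) == cudaSuccess &&
              cudaMemcpy(dev_b, b, bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        int threads = 128;                         // threads per block (arbitrary choice)
        int blocks = (n + threads - 1) / threads;  // enough blocks to cover n elements
        add<<<blocks, threads>>>(dev_a, dev_b, dev_c, n);  // the kernel launch
        ok = cudaGetLastError() == cudaSuccess &&
             cudaMemcpy(c, dev_c, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    // cudaFree accepts a null pointer, so this is safe after a failed allocation.
    cudaFree(dev_a);
    cudaFree(dev_b);
    cudaFree(dev_c);
    return ok;
}

int main() {
    static int a[N], b[N], c[N];
    for (int i = 0; i < N; ++i) {
        a[i] = i;
        b[i] = 2 * i;
    }

    if (!add_on_gpu(a, b, c, N)) {
        std::fprintf(stderr, "GPU add failed (is a CUDA device available?)\n");
        return 1;
    }

    // Check the GPU result against the same sum computed on the CPU.
    int mismatches = 0;
    for (int i = 0; i < N; ++i) {
        if (c[i] != a[i] + b[i]) ++mismatches;
    }
    std::printf("%d elements added on the GPU, %d mismatches\n", N, mismatches);
    return mismatches == 0 ? 0 : 1;
}
```

Something like `nvcc vecadd.cu -o vecadd` should build it. The only unusual syntax is the `<<<blocks, threads>>>` launch; everything else is ordinary C with a few runtime calls to move memory back and forth.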

September 1st, 2010 on 7:24 pm

Nice – I was actually looking for a solution like VirtualGL about a year ago to do exactly this! Thanks for pointing me to the solution – I know I’ll need it someday soon again.

September 3rd, 2010 on 10:40 pm

Great! I am glad you found it useful. I hope the VirtualGL project continues to get donations to stay afloat. It is a niche application, but certainly does come in handy.

December 5th, 2012 on 8:01 am

Hi,

I’m interested in how to forward rendering through SSH X11 forwarding.

Have you already made a wiki for it?

If you have any advice, please.

(Right now it opens the frame in an X11 window, but it closes after a fairly short time.)

December 5th, 2012 on 12:29 pm

Hi there,

I have (a little) additional information on this CUDA wiki page at: http://timelordz.com/wiki/Nvidia_CUDA#VirtualGL

I’ve updated the old Sun VirtualGL documentation link to the Oracle site, which may have some useful information for you. http://docs.oracle.com/cd/E19279-01/820-3257-12/VGL.html

Also, ensure you complete the steps to configure X given in the documentation (VirtualGL-2.3.2/doc/unixconfig.txt) and check out the Nvidia forum link above.

Honestly, I’ve not needed to use this solution since my first tests with it, so there may have been some changes.

Also, if the server has another (non-compute) graphics card you might explore using NXServer and connecting to the remote desktop via NXclient. NX provides much faster connectivity compared to VNC, and I have used it for other apps which use OpenGL such as VisIt, MATLAB, etc., though I’ve not actually tested it with CUDA and OpenGL. The Nvidia forums seem to suggest this as an alternative. Of course, if you only have one GPU on the compute node, or no desktop environment on it, then this option is out.